When I started my blog, I thought a lot about managing my posts. After much thought and exploration, I decided to use the Notion API. I’ve been using Notion for several years, and it’s very easy to edit text, including code, images, and other assets in various formats.

With the Notion API, I can interact with pages and easily retrieve their data in rich text format.

The problem was that the first fetch for each blog page always took a couple of seconds. From the second request on, the data came back very quickly because the server had cached it.

However, the first fetch was always slow, so I had to watch the paw-loading animation for a few seconds, regardless of how much content the page had.

So, I decided to use AWS products to optimize the speed. There were two kinds of data to store:

- page titles for showing the list on the main blog page in JSON format

- blocks for each child page in JSON format

I used S3 to store the titles and each page’s data.

Since the data from the Notion API contains a lot of detailed information, I had to filter and reformat it before saving it.
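As a rough sketch of that filtering step: a Notion block keeps its payload under a key named after its `type`, and each rich text item carries `plain_text`, `href`, and `annotations`. The `SlimBlock` shape and the `slimBlock` helper below are hypothetical names chosen for illustration; which fields are worth keeping depends on what the page renderer needs.

```typescript
// Only the fields this sketch reads; real Notion blocks carry many more.
interface RichText {
  plain_text: string;
  href: string | null;
  annotations: { bold: boolean; italic: boolean; code: boolean };
}

interface NotionBlock {
  id: string;
  type: string;
  // The block's payload sits under a key named after `type`, e.g. `paragraph`.
  [key: string]: unknown;
}

// Hypothetical trimmed shape to store on S3 (an assumption for illustration).
interface SlimBlock {
  id: string;
  type: string;
  text: { content: string; href: string | null; bold: boolean; italic: boolean; code: boolean }[];
}

function slimBlock(block: NotionBlock): SlimBlock {
  const payload = block[block.type] as { rich_text?: RichText[] } | undefined;
  // Blocks without rich text (images, dividers, ...) end up with an empty list.
  const richText = payload?.rich_text ?? [];
  return {
    id: block.id,
    type: block.type,
    text: richText.map((item) => ({
      content: item.plain_text,
      href: item.href,
      bold: item.annotations.bold,
      italic: item.annotations.italic,
      code: item.annotations.code,
    })),
  };
}

// Example input modeled on a paragraph block from the API.
const sampleBlock: NotionBlock = {
  object: "block",
  id: "b1",
  type: "paragraph",
  created_time: "2024-01-01T00:00:00.000Z",
  last_edited_time: "2024-01-02T00:00:00.000Z",
  has_children: false,
  paragraph: {
    color: "default",
    rich_text: [
      {
        type: "text",
        plain_text: "Hello",
        href: null,
        annotations: { bold: true, italic: false, strikethrough: false, underline: false, code: false, color: "default" },
      },
    ],
  },
};

console.log(JSON.stringify(slimBlock(sampleBlock)));
```

Fields such as `created_time`, `has_children`, and the unused annotations are dropped, which keeps the stored JSON small.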

My first idea was to create a Lambda function that creates and updates the files on S3. However, this idea couldn’t be perfect, since at the time of writing Notion did not provide webhooks for detecting when page content is updated.

So, I built an alternative using Amazon EventBridge. The goal was to have EventBridge trigger a Lambda function at regular intervals to fetch information about the child pages.

By comparing the last update time of each page with previously stored information, I aimed to update only the pages where changes had occurred.
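The comparison could look something like the sketch below. It assumes each page's `last_edited_time` (an ISO 8601 string in Notion's responses) is kept in a map from the previous run; `findChangedPages` and the `stored` map are hypothetical names used only for illustration.

```typescript
interface PageMeta {
  id: string;
  last_edited_time: string; // ISO 8601, as returned by the Notion API
}

// `stored` maps page id -> last_edited_time saved during the previous run.
function findChangedPages(current: PageMeta[], stored: Record<string, string>): string[] {
  return current
    .filter((page) => {
      const previous = stored[page.id];
      // A page is refreshed if it is new or was edited after the stored timestamp.
      return previous === undefined || Date.parse(page.last_edited_time) > Date.parse(previous);
    })
    .map((page) => page.id);
}

const changed = findChangedPages(
  [
    { id: "page-a", last_edited_time: "2024-03-02T10:00:00.000Z" },
    { id: "page-b", last_edited_time: "2024-03-01T10:00:00.000Z" },
    { id: "page-c", last_edited_time: "2024-03-01T12:00:00.000Z" },
  ],
  { "page-a": "2024-03-01T10:00:00.000Z", "page-b": "2024-03-01T10:00:00.000Z" },
);

console.log(changed); // page-a was edited, page-c is new, page-b is unchanged
```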

First, I created a function to update all the data at once. Using `@aws-sdk/client-s3` and `@notionhq/client`, I made a handler that stores the titles in `titles.json` and each page’s data in a file named after its id.
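A minimal sketch of the file layout, not the actual handler: it only builds the list of objects to upload, so it runs without AWS credentials. The `buildUploads` helper, the `PageTitle` shape, and the `.json` suffix on page files are assumptions made for illustration. Each entry uses the `Key`, `Body`, and `ContentType` fields that `PutObjectCommand` in `@aws-sdk/client-s3` accepts.

```typescript
interface PageTitle {
  id: string;
  title: string;
}

// Field names mirror PutObjectCommand input (minus Bucket).
interface Upload {
  Key: string;
  Body: string;
  ContentType: string;
}

// `pages` maps page id -> already filtered page data.
function buildUploads(titles: PageTitle[], pages: Record<string, unknown>): Upload[] {
  const uploads: Upload[] = [
    { Key: "titles.json", Body: JSON.stringify(titles), ContentType: "application/json" },
  ];
  for (const { id } of titles) {
    if (id in pages) {
      // One file per child page, named with the page id (the suffix is an assumption).
      uploads.push({ Key: `${id}.json`, Body: JSON.stringify(pages[id]), ContentType: "application/json" });
    }
  }
  return uploads;
}

const uploads = buildUploads(
  [{ id: "abc123", title: "Hello Notion" }],
  { abc123: [{ id: "b1", type: "paragraph", text: [] }] },
);

console.log(uploads.map((u) => u.Key));

// In a real handler, each entry would be sent with something like:
//   await s3.send(new PutObjectCommand({ Bucket: bucketName, ...upload }));
// where `s3` is an S3Client and `bucketName` is your bucket (both hypothetical here).
```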

After testing the function, I found that it wasn’t very efficient: execution took longer than expected because the code had to make one request to Notion per child page, plus one for the titles.

It also wasn’t cost-effective, since Lambda is billed according to execution duration.

This time, I decided to update the files manually using the S3 SDK. This method takes a bit more effort: whenever I update a Notion page and want the change reflected on my webpage, I run a script that updates the data stored on S3.

Since this approach doesn't use EventBridge or Lambda, I was able to save the costs associated with the time it takes to request data from Notion and format the response.

The code is almost the same as the Lambda handler, but to interact with S3 buckets from the script, I needed to create Cognito identity pools. With an identity pool, I can limit the role to interacting with the S3 buckets only.

Finally, I stored all the data in the S3 bucket. The next step was to configure CloudFront and Route 53.

Since I wanted to serve the data through a CDN, I blocked all public access to the S3 bucket. Then, I assigned an alternate domain name to the CloudFront distribution.

To access the Notion data bucket, the distribution needs to be given permission. When you set the distribution's origin to the bucket, CloudFront generates a policy that grants access to the bucket, and you can paste it into the bucket policy. Since I serve the Notion data from https://blogs.tokozing.com, I created a CNAME record for the subdomain in Route 53.

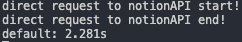

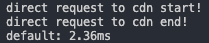
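For reference, the generated bucket policy usually has the shape below. This sketch assumes the origin uses origin access control (OAC); the bucket name, account id, and distribution id are placeholders, and `cloudFrontReadPolicy` is a hypothetical helper that only builds the JSON.

```typescript
function cloudFrontReadPolicy(bucket: string, accountId: string, distributionId: string) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "AllowCloudFrontServicePrincipalReadOnly",
        Effect: "Allow",
        // Only the CloudFront service may read objects...
        Principal: { Service: "cloudfront.amazonaws.com" },
        Action: "s3:GetObject",
        Resource: `arn:aws:s3:::${bucket}/*`,
        // ...and only when the request comes from this specific distribution.
        Condition: {
          StringEquals: {
            "AWS:SourceArn": `arn:aws:cloudfront::${accountId}:distribution/${distributionId}`,
          },
        },
      },
    ],
  };
}

// Placeholder values for illustration only.
const policy = cloudFrontReadPolicy("my-notion-data-bucket", "111122223333", "EDFDVBD6EXAMPLE");
console.log(JSON.stringify(policy, null, 2));
```

With public access blocked, this statement is what lets the distribution, and nothing else, read the stored JSON files.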

After finishing the setup, I compared the loading times for data received through the CDN and data fetched directly from Notion. Using `console.time()` and `console.timeEnd()`, I measured the time from the moment the page was accessed until the data for rendering was received.
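A measurement like this can be wrapped in a small helper. The sketch below is an assumption about how the timing might be structured, not the code used on the site; `timed` and `compareLoadTimes` are hypothetical names, and the two loader functions stand in for the real Notion and CDN requests.

```typescript
// Runs an async loader and prints its duration under the given label.
async function timed<T>(label: string, load: () => Promise<T>): Promise<T> {
  console.time(label);
  try {
    return await load();
  } finally {
    // Always stop the timer, even if the request fails.
    console.timeEnd(label);
  }
}

// Measures both data sources one after the other.
async function compareLoadTimes(
  fromNotion: () => Promise<unknown>,
  fromCdn: () => Promise<unknown>,
): Promise<void> {
  await timed("Request to Notion directly", fromNotion);
  await timed("Request to CDN", fromCdn);
}

// Example wiring (hypothetical loaders, not executed here):
//   compareLoadTimes(
//     () => loadPageFromNotion(pageId),
//     () => fetch(cdnUrlForPage).then((res) => res.json()),
//   );
```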

The result showed a significant difference!🙌

Request to Notion directly

Request to CDN

When I checked the site after deployment, I found that pages with more data still took some time because the page has to be rendered on the server, but the load time was noticeably shorter than before.